Best Big Data Platforms


By Ritinder Kaur, Market Research Analyst at SelectHub

Big data platforms changed the software development landscape and challenged existing paradigms. Vendors scrambled to assemble applications capable of managing large, complex volumes and generating analytics insights.

Your systems may need a boost if you’re constantly playing catch-up with your digital assets. Our buyer’s guide will help you pick a best-fit big data solution to address your BI and analytics needs. Read on for desirable features, trends and handy selection tips.

Executive Summary

- Big data platforms help businesses achieve their business intelligence and analytics goals while managing large volumes of information.

- Determining your must-have features and devising a software selection strategy are essential steps in your purchase process.

- Data interoperability across systems, scalability and robust integration with fast-processing systems are critical big data software features.

- Centralized analytics is a thing of the past, with domain-driven data products and federated analytics changing how we do data pipelines.

- Prepare a list of questions to ask vendors before making a purchasing decision.

- What Are Big Data Platforms?

- Deployment Methods

- Benefits

- Implementation Goals

- Basic Features & Functionality

- Advanced Features To Consider

- Current & Upcoming Trends

- Software Comparison Strategy

- Cost & Pricing Considerations

- The Most Popular Big Data Platforms

- Questions To Ask Yourself

- Questions To Ask Vendors

- Next Steps

- Product Comparisons

- Additional Resources

What Are Big Data Platforms?

A big data platform is a software application that manages huge volumes and varied types of data. Such platforms fill the gap left by conventional systems that aren't equipped to handle new data varieties and volumes.

Big data is a term for large datasets that are impossible to capture, manage or process through conventional databases. These include multimedia, sensors, IoT (Internet of Things) and streaming data.

Volume, variety and velocity characterize big data.

What else is new? Big data analytics platforms differ from one another in how they manage, store and process information, and especially in how they integrate with other applications and BI systems.

- One tool might process data at the source to save power, while another brings it into a data warehouse.

- Processing enormous workloads can overwhelm conventional systems. This need led to distributed computing platforms like Apache Hadoop and, later, Apache Spark, a separate engine that can run on Hadoop clusters.

- Hadoop accelerates processing tasks by distributing workloads across the servers in a cluster, which run them in parallel.

- Every data platform offers some semblance of user autonomy. As a discerning buyer, match your users’ skills and needs to the platform’s self-service capabilities to find a good match.
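
The idea of splitting a workload across servers and merging the partial results can be sketched in a few lines of Python. This is only an illustration of the map-and-reduce pattern, not Hadoop's own API: the function names and sample log lines are made up, and threads stand in for the cluster's servers.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(records, num_workers):
    """Partition the input so each worker gets a roughly equal share."""
    chunk_size = max(1, -(-len(records) // num_workers))  # ceiling division
    return [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]


def map_count_words(chunk):
    """Map step: count words within one partition only."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def reduce_counts(partials):
    """Reduce step: merge the per-partition counts into one result."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


def parallel_word_count(records, num_workers=4):
    chunks = split_into_chunks(records, num_workers)
    # Threads stand in for cluster nodes here; a real cluster would run
    # each partition on a separate server.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(map_count_words, chunks))
    return reduce_counts(partials)


# Hypothetical sample input
log_lines = [
    "error disk full",
    "warning disk slow",
    "error network down",
    "info disk ok",
]
word_totals = parallel_word_count(log_lines, num_workers=2)
```

Because each partition is counted independently, adding workers shortens the map step without changing the final totals.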

Architecture

What does a big data platform’s architecture look like? Before a standard design was approved, industry software leaders — Oracle, SAP, Microsoft and IBM — proposed homegrown designs for big data systems.

These architectures were similar in design with the following components.

- A data sources layer

- A big data platform layer with integration, real-time analytics, security and governance

- An advanced analytics layer with cognitive learning for descriptive, predictive and prescriptive analytics

- Consumer applications that use big data analytics

NIST Big Data Reference Architecture (NBDRA)

To drive standard services globally, the European Commission proposed that a common architecture was a must for big data systems. Siloed software serves no one, binding consumers with specific vendors and blocking collaboration and innovation.

The National Institute of Standards and Technology (NIST) Big Data Public Working Group (NBD-PWG) sought to develop a comprehensive list of big data requirements for use cases in nine primary software domains.

- Government operations

- Healthcare and life sciences

- Commercial

- Deep learning and social media

- Research

- Astronomy and physics

- Defense

- Earth, environmental and polar science

- Energy

The group proposed the NIST Big Data Reference Architecture (NBDRA). It’s a common standards roadmap consisting of data definitions, taxonomies, reference architectures, and security and privacy needs.

The NBDRA is a living document, evolving with new developments in big data. Its components include the following.

- A data provider

- A big data system with collection, preparation, analytics, visualization, security and privacy compliance

- The big data framework provider with various technologies linked in a specific hierarchy

- Data-consuming applications or users

- A system orchestrator that organizes the data application activities into an operational system

- The systems and data lifecycle management layers
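
One way to picture how these roles fit together is a toy pipeline in which an orchestrator passes data from a provider, through preparation and analytics stages, to a consumer. The sketch below is an illustration only: the class, function names and sensor records are hypothetical and are not part of the NBDRA itself.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Iterable, List


@dataclass
class Orchestrator:
    """Wires the roles into one operational flow (hypothetical class)."""
    provider: Callable[[], Iterable[dict]]
    stages: List[Callable[[list], list]] = field(default_factory=list)

    def run(self, consumer: Callable[[list], object]):
        data = list(self.provider())   # data provider
        for stage in self.stages:      # big data application stages
            data = stage(data)
        return consumer(data)          # data consumer


def sensor_provider():
    # Data provider: emits raw records, including one unusable reading.
    return [
        {"site": "A", "temp": 21.0},
        {"site": "A", "temp": None},
        {"site": "B", "temp": 25.0},
    ]


def prepare(records):
    # Preparation: drop records with missing values.
    return [r for r in records if r["temp"] is not None]


def analyze(records):
    # Analytics: reduce the cleaned records to a single summary row.
    return [{"avg_temp": mean(r["temp"] for r in records), "count": len(records)}]


pipeline = Orchestrator(provider=sensor_provider, stages=[prepare, analyze])
report = pipeline.run(consumer=lambda rows: rows[0])
```

Keeping each role behind a simple function boundary is what lets you swap one provider or analytics stage for another without rewriting the rest of the flow.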

Deployment Methods

Though many enterprises still prefer to keep sensitive data on proprietary systems, cloud solutions are popular. Private clouds provide the security and discretion of on-premise systems with fast computing speeds.

On-Premise

Many enterprises with legacy on-premise systems find it easier to maintain the status quo and prefer to bolt a big data solution onto their existing infrastructure.

Companies that set up on-premise solutions from scratch have their reasons, like wanting greater control over the infrastructure and data and possibly a strong IT team to back them up for maintenance and fixes.

But scaling to take on big data workloads can be tedious and resource-intensive.

Cloud-Based

Since scalability is at the core of big data analytics, cloud deployment is the go-to method for most big data needs. Besides software-as-a-service, big data providers offer cloud infrastructure and managed services to prepare and store your data.

These platforms offer:

- Extensive scalability.

- Zero infrastructure and maintenance costs. You only pay recurring subscription fees.

- 24x7 data accessibility from anywhere.

- Painless implementation and low cost of entry.

A downside is that you must rely on a third party for data security. However, many cloud software vendors provide robust encryption and regular penetration testing with annual audits, which should ease security concerns.

Hybrid

If you don't want to rely entirely on cloud providers for data governance and protection, best-of-breed hybrid cloud deployment might be the way to go. It’s a patchwork of multiple private and public cloud and on-premise environments.

And it’s the best of both worlds. You get the cloud's efficiency, flexibility and affordability while keeping data secure.

But hybrid adoption can be complex, needing careful planning, workload assessment and continuous optimization.

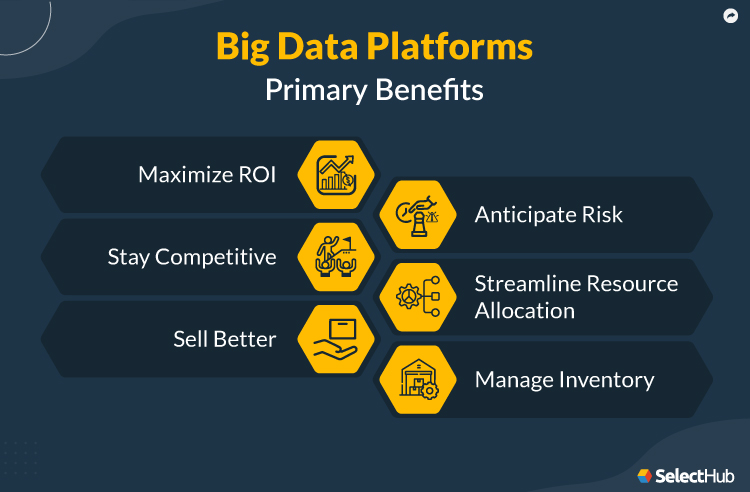

Benefits

When your digital assets are as multifaceted as big data, a platform that has your back can make all the difference. It assists operations and informs customer interactions, including outreach, support and sales.

When processes work smoothly, focusing on what matters becomes easy. You can see what’s working and do more of it. Besides, you can identify and remove blockers, turning them into opportunities.

- Maximize ROI: Accurate, timely data drives intelligent decision-making and empowers you to take advantage of opportunities. You can optimize returns by improving process efficiency and employee productivity.

- Stay Competitive: Market intelligence tells you when to diversify by launching new products. Uncovering unexplored customer segments can be rewarding, as Coca-Cola found by offering corn-free soft drinks during Passover.

- Sell Better: Customer analytics is the most significant advantage of big data mining. It supports personalized marketing via email outreach, discounts and loyalty programs.

- Anticipate Risk: Cut your losses by ditching products and campaigns that are money sinks. Invest wisely — decide when to sell/buy by tracking stock markets. Approve/deny loans to individuals/companies based on risk assessment.

- Streamline Resource Allocation: Plan for the next financial period by comparing the actual and estimated budgets. Allocate funds for expenses, manage the cash flow across departments, and support project planning and hiring decisions.

- Manage Inventory: Optimize stock levels with information on market demand. Keep carrying costs in check with intelligent route planning using location data and traffic updates. Big data can include addresses, zip codes and area names.

Implementation Goals

Assess internally why your company stakeholders want to implement a big data solution. What are their objectives? What do they hope to achieve?

Matching your requirements with features of shortlisted software can help you select a suitable solution.

| Goal 1 | Stay Competitive |
|---|---|
| Goal 2 | Improve Operations |
| Goal 3 | Boost Customer Satisfaction |
| Goal 4 | Manage Big Data |
| Goal 5 | Maintain Data Quality |

Basic Features & Functionality

Self-service, interactivity and customization options allow you to view, explore and discover metrics according to your role and requirements.

Which other features should be on your must-have list?

| Interoperability | It allows systems to combine and use data freely, regardless of location and type. Your solution must connect to your preferred data sources and applications, and vendor-provided SDKs (software development kits) help you do that. The big data tool must also have the means to combine standardized data, which is where connectors play a role. |
|---|---|
| Data Storage Integration | Big data platforms don't always include their own storage, in which case they rely on external systems or additional modules. Hadoop Distributed File System (HDFS) supports Hadoop and Spark clusters. Ask potential vendors whether the platform integrates with your preferred storage systems. |
| High-Volume Processing | Highly performant systems process data and scale fast, distributing workloads onto separate servers. Many such systems use machine learning to automate workflows. A trial run should tell you if the solution suffers from performance lags and latency issues. |
| Data Preparation | Not every big data tool has data prep capabilities. Hadoop doesn’t, but it has Hive, Apache Pig and MapReduce in its corner. Apache Spark enables data preprocessing before you aggregate it for reporting and analysis. Big data tools with data prep, cleansing and enrichment include Alteryx, Datameer and SAS Data Loader for Hadoop. Hadoop works seamlessly with Qlik Sense, Tableau and Alteryx Designer Cloud for generating visual insights. |
| Data Blending | It’s the process of combining datasets from various sources for comprehensive insights. Spark has data blending built in, while Hadoop works with KNIME Analytics, Pentaho Data Integration and Tableau. |
| Data Modeling | Building data models involves creating a concept map of how various datasets relate to one another. It helps organize data in repositories and assists in analysis and trendspotting. Entity-relationship diagrams, data flow diagrams and dimensional modeling are relationship-mapping techniques. |
| Scalability | Vertical and horizontal scalability, meaning adding more power and storage to a server or adding more servers, keeps you worry-free when workloads increase. If your big data analytics platform doesn’t match your scalability requirements, leaning on a platform like Hadoop might be the next best thing. Additionally, techniques like data caching and query optimization keep your platform performant. Parallel task processing accelerates big data analysis. Automatic failover and fault-tolerant processing are essential for 24/7 data availability. |
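
To make data blending concrete, here is a minimal sketch that joins records from two sources on a shared key. The datasets, field names and the `blend` function are hypothetical; a real platform would do the same thing through its connectors at far larger scale.

```python
from collections import defaultdict

# Hypothetical source 1: customer records from a CRM export
crm_customers = [
    {"customer_id": 1, "name": "Acme", "region": "EMEA"},
    {"customer_id": 2, "name": "Globex", "region": "APAC"},
]

# Hypothetical source 2: orders from a web store
web_orders = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": 1, "amount": 80.0},
    {"customer_id": 3, "amount": 50.0},  # no matching CRM record
]


def blend(customers, orders, key="customer_id"):
    """Left-join customers to their order totals on a shared key."""
    totals = defaultdict(float)
    for order in orders:
        totals[order[key]] += order["amount"]
    # Customers with no orders get 0.0; orders without a customer are dropped.
    return [{**c, "total_spend": totals.get(c[key], 0.0)} for c in customers]


blended = blend(crm_customers, web_orders)
```

Note the choice of a left join: it keeps every customer, which suits customer-level reporting but silently drops orphaned orders, so a real blend should log those mismatches.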

Advanced Features & Functionality

They might not be business-critical today, but investing in advanced features now can save you from inflated costs later.

| In-Memory Computing | It’s a power-saving feature that keeps data in RAM rather than querying sources repeatedly, which also drains the database. Apache Spark can cache data in memory while computing instead of reloading it from disk each time. |
|---|---|
| Stream Processing | It’s the process of computing data as the system produces or receives it. The entire process of cleansing, aggregating, transforming and analyzing happens in real time. It’s an essential technology for analyzing sensor data, social media feeds, live videos and clickstreams. Spark has built-in streaming, while Hadoop relies on Apache Storm and Flink. Apache Kafka is another widely used streaming platform. |
| Integration With Programming Languages | Support for coding languages makes a big data tool a better choice for building custom apps. Many big data platforms have out-of-the-box Python, R and JavaScript libraries. PySpark is the Python API for Spark. |
| Machine Learning | Artificial intelligence (AI) and machine learning are efficient model-building assistants with automation and sophisticated algorithms. If your big data platform doesn’t have machine learning built in, can it integrate with a solution that does? Hadoop relies on Apache Mahout for building and deploying ML models. Spark has a model-building ML library, MLlib, with handy algorithms and tools. |
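
Stream processing is easier to grasp with a small example. The sketch below groups a clickstream into fixed one-minute windows and emits each window's counts as soon as it closes. It assumes events arrive in timestamp order, and the function name and sample events are hypothetical rather than taken from any streaming product.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds=60):
    """Yield (window_start, counts) as soon as each window closes.

    Assumes events arrive ordered by timestamp.
    """
    current_start = None
    counts = Counter()
    for timestamp, page in events:
        window_start = timestamp - (timestamp % window_seconds)
        if current_start is None:
            current_start = window_start
        elif window_start != current_start:
            yield current_start, dict(counts)  # emit the finished window
            current_start, counts = window_start, Counter()
        counts[page] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final, partial window


# Hypothetical clickstream: (seconds since start, page visited)
clicks = [(0, "/home"), (15, "/pricing"), (59, "/home"), (61, "/home"), (130, "/docs")]
windows = list(tumbling_window_counts(clicks))
```

Because the function is a generator, it holds only the current window in memory, which is the property that lets streaming engines keep up with unbounded feeds.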

Current & Upcoming Trends

Edge Computing

Big data redefined computing to extend to edge devices. They include machines that collect real-world information — smartphones, home automation bots, sensors, medical scanners and industrial systems.

Amidst the deluge of big data information, businesses struggle with processing costs. Conveying data to warehouses and databases consumes time and power, while processing it at the source is cost-effective and faster.

Edge devices are optimized to incorporate low-bandwidth touchpoints and can operate without cloud infrastructure.

Manufacturing and telecom companies are leading in adopting edge technologies, with monitoring, response and site trustworthiness being their primary focus areas.
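
Here is a rough sketch of why processing at the source saves bandwidth: the device reduces its raw readings to a compact summary and sends only that. The readings, the alert threshold and the function name are assumptions made for illustration.

```python
import json
from statistics import mean


def summarize_on_device(readings, alert_above=80.0):
    """Reduce raw sensor readings to a compact summary before transmission."""
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alerts": [r for r in readings if r > alert_above],
    }


# Hypothetical data: 600 temperature readings with a single spike
raw_readings = [70.0 + (i % 5) * 0.5 for i in range(600)]
raw_readings[300] = 85.5

summary = summarize_on_device(raw_readings)

# Compare the payload sizes of sending everything versus the summary only
raw_bytes = len(json.dumps(raw_readings))
summary_bytes = len(json.dumps(summary))
```

The trade-off is that detail is discarded at the edge, so decide up front which aggregates and alerts the central system will need.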

Artificial Intelligence

The hype about AI-ML and natural language processing is quite loud presently. They’re excellent equalizers, enabling anyone with basic computer skills to interact with data and gain helpful information.

Model training makes these technologies fast and near-accurate and allows open-ended exploration and data analysis. Benefits of AI-ML include outlier detection, pattern recognition and forecasting.
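
Outlier detection and forecasting don't have to start with a trained model. As a simple stand-in for what ML-driven tools automate, the sketch below flags values that sit far from the mean and projects the next value from a moving average. The threshold, window size and order figures are hypothetical.

```python
from statistics import mean, stdev


def find_outliers(values, threshold=2.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []
    return [v for v in values if abs(v - mu) / sigma > threshold]


def moving_average_forecast(values, window=3):
    """Naive forecast: the next value is the mean of the last `window` values."""
    return mean(values[-window:])


# Hypothetical daily order counts with one suspicious spike
daily_orders = [100, 102, 98, 101, 99, 103, 250]

outliers = find_outliers(daily_orders)
# Forecast from the clean history, leaving out the spike
forecast = moving_average_forecast(daily_orders[:-1])
```

A fixed z-score threshold is crude on small samples, which is one reason platforms lean on trained models for this job.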

What-if scenarios and time series analysis enable glancing into the future and predicting what’s likely to happen with a fair degree of accuracy. Prescriptive analytics has raised the bar — the software suggests how to proceed to get the best possible outcome.

Despite these innovations, AI-related data security concerns are rising, and there aren’t enough guardrails yet. We can't decide whether we love or hate it.

Data Standardization

Standardized data facilitates software systems working together and using information interchangeably. Common definitions, terminologies and references driving big data, cloud, IoT and AI systems make for a globally connected digital system.

Interoperability is the property of digital resources to be accessible to the involved parties via standard definitions and formats. It instills confidence in users, provides open market access and encourages innovation.

The NIST Big Data Public Working Group encourages organizations to maintain data consistency by adopting NBDRA-established standards. It significantly impacts how vendors develop software and innovate.

One technology that supports standardization is the semantic metadata model, an index with references and dataset attributes that supports cross-platform integration and ubiquitous data sharing.

It’s why the data mesh is the talk of the town.

Data Mesh

Zhamak Dehghani, principal technology consultant at ThoughtWorks and creator of the “data mesh,” describes it as a decentralized alternative to monolithic data architectures.

Data lakes are centralized and generic, and a centralized analytics team can be a bottleneck, especially when scaling to meet additional workloads.

Domain-driven design is a necessity to accelerate data pipelines, she says.

A data mesh splits the architecture into “microservices-like” data products for storage, transformation and modeling. Each data product belongs to a separate team and serves a specific purpose in the analytics pipeline.

All components conform to quality and interoperability standards, with the respective teams owning data quality.
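
A data product with team ownership and a shared quality contract might look something like the sketch below. It's a minimal illustration, not a data mesh framework: the `DataProduct` class, team names and schemas are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class DataProduct:
    """A dataset owned by one team and published under a shared contract."""
    name: str
    owner_team: str
    schema: Dict[str, type]          # interoperability contract: column -> type
    load: Callable[[], List[dict]]   # how the owning team serves the data

    def validate(self) -> List[str]:
        """Return contract violations; an empty list means the product conforms."""
        problems = []
        for i, row in enumerate(self.load()):
            for column, expected in self.schema.items():
                if column not in row:
                    problems.append(f"row {i}: missing '{column}'")
                elif not isinstance(row[column], expected):
                    problems.append(f"row {i}: '{column}' is not {expected.__name__}")
        return problems


# Hypothetical data products owned by two different domain teams
orders = DataProduct(
    name="orders",
    owner_team="sales",
    schema={"order_id": int, "amount": float},
    load=lambda: [{"order_id": 1, "amount": 9.5}],
)
shipments = DataProduct(
    name="shipments",
    owner_team="logistics",
    schema={"order_id": int, "carrier": str},
    load=lambda: [{"order_id": "1", "carrier": "DHL"}, {"order_id": 2}],
)

order_issues = orders.validate()
shipment_issues = shipments.validate()
```

Putting the check on the product itself reflects the mesh principle that the owning team, not a central analytics group, answers for data quality.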

Data mesh adoption is still in its infancy. As Dehghani says, the technology exists. What enterprises are yet to achieve is a mindset shift.

Software Comparison Strategy

It can be overwhelming to make an informed choice, with many big data platforms having similar features. Your business is unique, so what works for others might not be the best fit.

How can you proceed?

Start by determining your business needs. Analyze where your current big data analytics solution falls short and how a new solution might help fill the gaps.

Check if the solution is performant while querying data.

- Is live source connectivity available, or is replication the only option?

- Check how live querying affects performance in tools that promise real-time insights. Querying data at the source is resource-intensive, and many databases restrict live queries because they drain the database’s resources.

- The more queries there are, the greater the power consumption. Check with the vendor or test run the product to assess how performance varies with fluctuating workloads.
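
During a trial, a small timing harness can show how query performance changes as data volume grows. The sketch below uses Python's built-in SQLite module as a stand-in for the platform under test. The table, query and row counts are hypothetical, so swap in your own connection and workload.

```python
import sqlite3
import time


def build_sample_db(num_rows):
    """Create an in-memory table of synthetic sales rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    rows = [("region_%d" % (i % 10), float(i % 100)) for i in range(num_rows)]
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()
    return conn


def time_query(conn, sql, repeats=5):
    """Return the best-of-N wall-clock time in seconds for one query."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        conn.execute(sql).fetchall()
        best = min(best, time.perf_counter() - start)
    return best


QUERY = "SELECT region, SUM(amount) FROM sales GROUP BY region"

# Time the same query against growing data volumes
timings = {}
for size in (1_000, 10_000, 100_000):
    conn = build_sample_db(size)
    timings[size] = time_query(conn, QUERY)
    conn.close()
```

Taking the best of several runs filters out one-off noise. For a fuller picture, repeat the test with concurrent users, since the source notes that performance varies with fluctuating workloads.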

Here are some other considerations when evaluating big data analytics platforms.

- Integration with Hadoop is a primary requirement as its distributed file system tips the scales. Additionally, during your integration journey, you’ll encounter systems that work with Hadoop. Why not factor it in at the onset?

- Hybrid cloud solutions are worth considering, with many vendors offering tailor-made configurations.

- Data you only archive needs less storage headroom than data you run calculations on, so assess your needs before settling on a storage capacity.

- Consider including in-memory analytics as it’s power-saving.

Vendor research is crucial and online reviews, industry guides and peer recommendations are excellent resources. Free trials are worth your time to check product usability and functionality.

Get started today with our big data platform comparison report to gain feature-by-feature insight into top big data software leaders.

Cost & Pricing Considerations

Flexible, tier-based pricing makes cloud solutions accessible, but consider user seats, computing speed and storage when calculating the total cost.

Pay-as-you-go models charge by usage, costing less per storage unit as consumption increases.
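
To see how the per-unit price falls as usage grows, consider this small calculation. The tier boundaries and rates are invented for illustration and don't reflect any vendor's actual price list.

```python
# Hypothetical tiers: (upper bound in TB, price per TB per month in USD)
TIERS = [(50, 23.0), (500, 22.0), (float("inf"), 21.0)]


def monthly_storage_cost(usage_tb, tiers=TIERS):
    """Charge each slice of usage at the rate of the tier it falls into."""
    cost, lower = 0.0, 0.0
    for upper, rate in tiers:
        if usage_tb <= lower:
            break
        billable = min(usage_tb, upper) - lower
        cost += billable * rate
        lower = upper
    return cost


small_bill = monthly_storage_cost(10)    # entirely within the first tier
large_bill = monthly_storage_cost(100)   # spans the first two tiers
effective_rate_large = large_bill / 100  # average price per TB at 100 TB
```

Running your expected usage through a vendor's published tiers in this way makes quotes from different providers easier to compare.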

- Oracle Analytics Cloud Professional costs $16 per user per month, while the Enterprise edition comes at a monthly charge of $80.

- IBM offers a discount of 17% on your charges when you commit to a specific resource usage of their cloud services per month.

- One TB of disk space for Hadoop costs approximately $1,000 to $2,000. A mid-range server can have 3 to 6 TB of disk space.

- Cloudera Data Platform pricing is based on Cloudera Compute Units (CCUs). The vendor separately prices data engineering, operational database, machine learning and data hub.
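
The Hadoop figures above lend themselves to a back-of-the-envelope estimate. The sketch below uses the quoted cost and disk ranges and assumes a replication factor of three, which is HDFS's default. Treat that factor and the function itself as assumptions to check against your own cluster settings.

```python
import math

COST_PER_TB_USD = (1000, 2000)  # range quoted above
DISK_PER_SERVER_TB = (3, 6)     # range quoted above
REPLICATION_FACTOR = 3          # assumption: HDFS default; confirm for your cluster


def estimate_hadoop_storage(raw_data_tb, replication=REPLICATION_FACTOR):
    """Estimate disk cost and server count for a given volume of raw data."""
    stored_tb = raw_data_tb * replication  # every block is kept `replication` times
    return {
        "stored_tb": stored_tb,
        "cost_low_usd": stored_tb * min(COST_PER_TB_USD),
        "cost_high_usd": stored_tb * max(COST_PER_TB_USD),
        # Fewest servers if each holds 6 TB, most if each holds 3 TB
        "servers_min": math.ceil(stored_tb / max(DISK_PER_SERVER_TB)),
        "servers_max": math.ceil(stored_tb / min(DISK_PER_SERVER_TB)),
    }


estimate = estimate_hadoop_storage(raw_data_tb=20)
```

For 20 TB of raw data, replication triples the footprint to 60 TB, which is why storage budgets based on raw volume alone tend to fall short.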

24/7 email and phone support is likely to cost extra. Additionally, it’s better to factor in the cost of data migration, deployment, customization and add-ons at the onset.

The Most Popular Big Data Platforms

Jumpstart your software search with our research team’s list of the top five big data platforms.

Oracle Analytics Cloud

What It Does

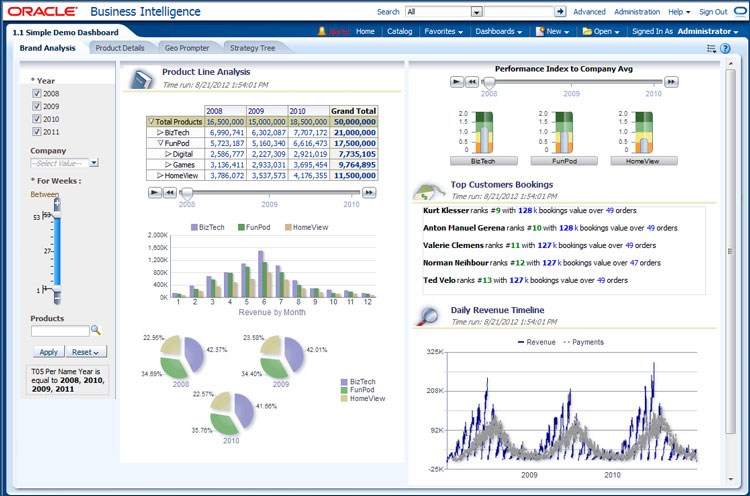

Oracle Analytics Cloud is a big data platform with extensive source integration via over 45 data connectors. OAC highlights dataset correlations across visualizations on the same dashboard with a brushing feature.

Intuitive dashboards address the last mile of analytics in Oracle Analytics.

The system delivers meaningful insights on demand by processing natural language. Additionally, it generates best-fit visualizations using machine-learning recommendations.

Product Overview

| User Sentiment Score | 84% |
|---|---|
| Analyst Rating | 96 |
| Company Size | S, M, L |
| Starting Price | $16/user/month |

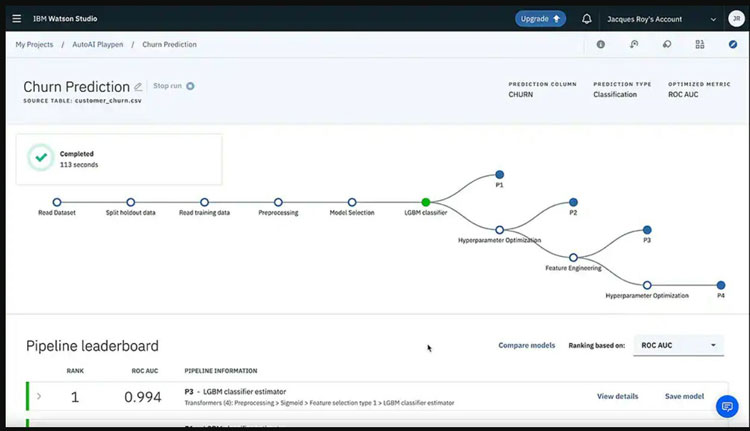

IBM Watson Analytics

It’s a data science solution with natural language processing. The vendor evolved it from a question-answering (QA) computing system to a prescriptive system. It relies on Hadoop for data processing.

IBM Watson Health has over 100 techniques for analyzing language, recognizing sources, finding evidence and scoring it to rank possible hypotheses and present them to the user.

A 30-day free trial is available.

Predict churn rate in IBM Watson Analytics.

Product Overview

| User Sentiment Score | 84% |
|---|---|
| Analyst Rating | 95 |
| Company Size | S, M, L |
| Starting Price | $140/month |

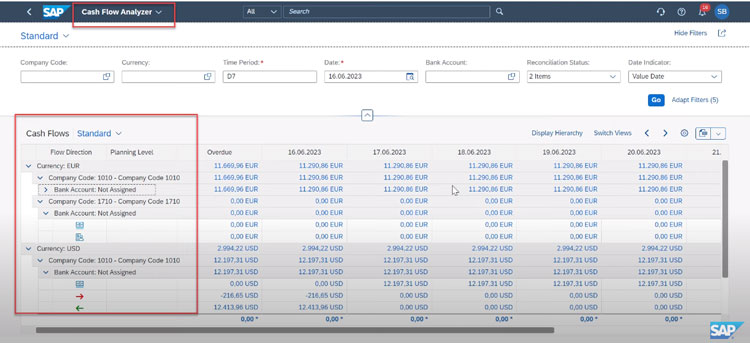

SAP HANA

It’s the vendor’s in-memory database and development platform with robust data processing and real-time analytics. Processing data in memory helps avoid time-intensive aggregations. SAP HANA workflows run seamlessly on Azure.

It provides database services to SAP Analytics Cloud, SAP Data Warehouse Cloud, SAP Business Applications and third-party platforms. Besides data preprocessing, columnar storage, data aging, dynamic tiering and data recovery are available.

Analyze cash flow across periods, accounts and liquidity items with SAP HANA.

Product Overview

| User Sentiment Score | 86% |
|---|---|
| Analyst Rating | 92 |
| Company Size | S, M, L |
| Starting Price | Available on request |

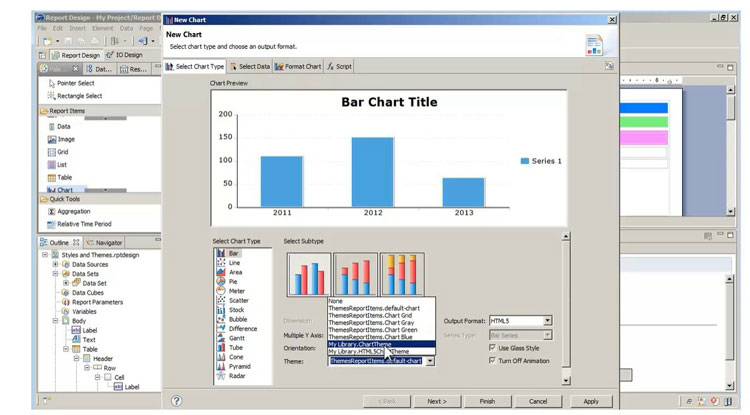

BIRT

It’s an open-source reporting and BI solution on Eclipse IDE for Java and Java EE rich-client and web programs. A visual designer addresses enterprise reporting needs with a built-in charting engine and runtime component.

BIRT supports reusable queries, crosstabs and combining data from multiple sources within a report.

BIRT Report Designer has a rich chart library out of the box.

Product Overview

| User Sentiment Score | 80% |
|---|---|
| Analyst Rating | 90 |
| Company Size | S, M, L |
| Starting Price | $30/month |

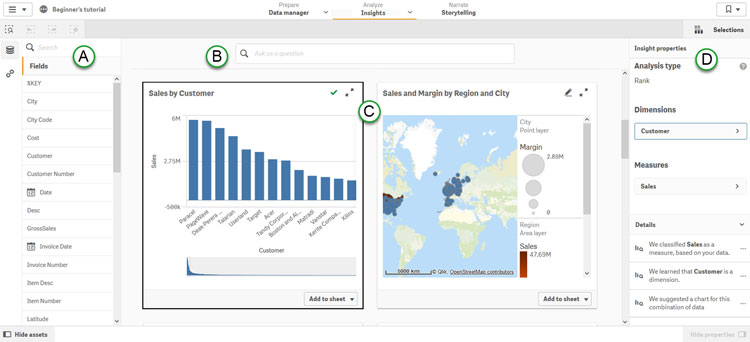

Qlik Sense

It’s a big data analytics and app-building platform with pre-built analytics components and the option to design custom modules. Its sources include files, websites, applications and big data systems like Hadoop, AWS and Azure.

Qlik Attunity keeps data synced across sources, databases, warehouses and data lakes. Qlik Catalog promotes client trust in record reliability with lineage tracking. You can build custom apps using the Qlik partner network and its open APIs.

The vendor offers annual subscriptions to the Business and Enterprise editions. A 30-day trial is available.

Get answers fast from Qlik’s Insight Advisor.

Product Overview

| User Sentiment Score | 85% |
|---|---|
| Analyst Rating | 90 |
| Company Size | S, M, L |
| Starting Price | $30/user/month |

Refer to our Jumpstart platform to compare your shortlisted products feature-by-feature.

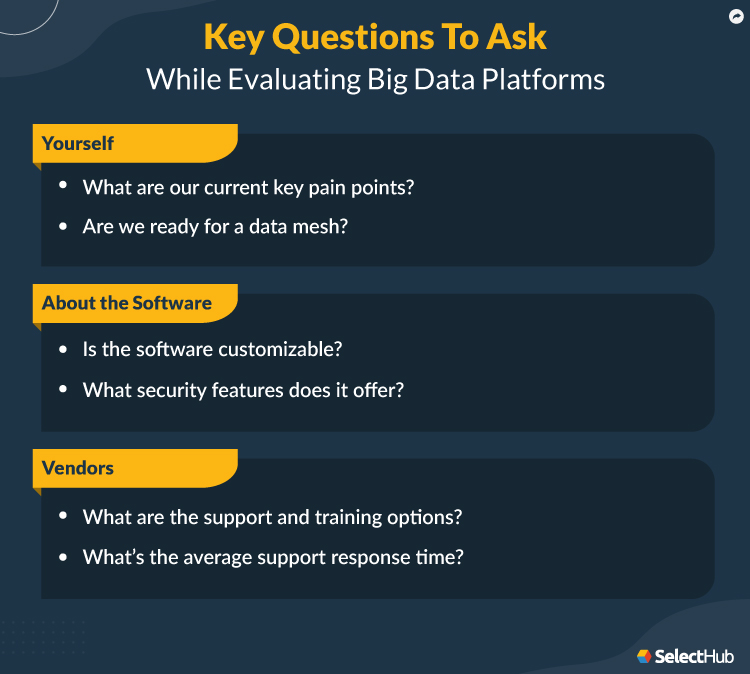

Questions To Ask Yourself

Use these questions as a starting point for internal conversations:

- What are our current key pain points?

- Which are the must-have big data platform features to address them?

- Which operational domains (HR, inventory, project planning) should integrate with the new system?

- Are we ready for a data mesh architecture?

Questions To Ask Vendors

Learn more about vendors and their offerings by adding these questions to your list.

About the Software

- Is the software customizable?

- What security features does it offer?

- How does the solution integrate with other systems?

- Is it compatible with my tech stack?

About the Vendor

- What business sizes do you work with?

- What are the support and training options?

- How long does onboarding take?

- What’s the average support response time?

Next Steps

Expecting your legacy systems to scale with your big data needs is like playing a losing hand: you’ve got the wrong cards. To win, choose a data platform that handles your big data end-to-end and supports advanced analytics with machine learning.

Define your business needs with our free requirements checklist and get started on your software search. All the best!

Product Comparisons

Additional Resources

Products found for Big Data Platforms

Hadoop

Apache Hadoop is an open source framework for dealing with large quantities of data. It’s considered a landmark group of products in the business intelligence and data analytics space, and is comprised of several different components. It functions on basic analytics principles like distributed computing, large data processing, machine learning and more. Hadoop is part of a growing family of free, open source software (FOSS) projects from the Apache Foundation, and works well in conjunction with other third-party products.

Domo

Domo is a cloud-based business management suite that accelerates digital transformation for businesses of all sizes. It performs both micro and macro-level analysis to provide teams with in-depth insight into their business metrics as well as solve problems smarter and faster. It presents these analyses in interactive visualizations to make patterns obvious to users, facilitating the discovery of actionable insights. Through shared key performance indicators, users can overcome team silos and work together across departments.

Cloudera

Cloudera is a multi-environment analytics platform powered by integrated open source technologies that help users glean actionable business insights from their data, wherever it lives. With an enterprise data cloud, it puts data management at analysts’ fingertips, with the scalability and elasticity to manage any workload. It offers users transparency into the whole data lifecycle and the flexibility of customization through its open architecture. It is available on an annual subscription basis with three offerings: CDP Data Center, Enterprise Data Hub and HDP Enterprise Plus. Each edition offers different components and pricing varies based on computing power, storage space and number of nodes. The company merged with Hortonworks in 2019 to provide a comprehensive, end-to-end hybrid and multi-cloud offering.

Redshift

Amazon Redshift is a cloud-based data warehouse service that enables enterprise-level querying for reporting and analytics. It supports an unlimited number of concurrent queries and users through its high-performing Advanced Query Accelerator (AQUA). Scalable as needed, it retrieves information faster through massive parallel processing, columnar storage, compression and replication. Data analysts and developers leverage its machine learning attributes to create, train and deploy Amazon SageMaker models. Enterprises can glean actionable insights through its integration with the AWS ecosystem. Data sharing is enabled across all its clusters, eliminating the need to copy or move data. It offers data security through SSL and AES-256 encryption with granular access controls to ensure that users have access to only the information that they need.

Hortonworks

Hortonworks Data Platform is an open-source data analysis and collection product from Hortonworks. It is designed to meet the needs of small, medium and large enterprises that are trying to take advantage of big data. The company was acquired by Cloudera in 2019 for $5.2 billion. HDP has a number of features that help it process large enterprise-level volumes, including multi-workload processing, batch processing, real-time processing, governance and more.

Talend

Talend is an open-source data integration and management platform that enables big data ingestion, transformation and mapping at the enterprise level. The vendor provides cross-network connectivity, data quality and master data management in a single, unified hub – the Data Fabric. Based on industry standards like Eclipse, Java and SQL, it helps businesses create reusable pipelines – build once and use anywhere, with no proprietary lock-in. The open-source version is free, with the cloud data integration module available for a monthly and annual fee. The price of Data Fabric is available on request.

SAP HANA

SAP HANA is the in-memory database for SAP’s Business Technology platform with strong data processing and analytics capabilities that reduce data redundancy and data footprint, while optimizing hardware and IT operational needs to support business in real time. Available on-premise, in the cloud and as a hybrid solution, it performs advanced analytics on live transactional data to display actionable information. With an in-memory architecture and lean data model that helps businesses access data at the speed of thought, it serves as a single source of all relevant data. It integrates with a multitude of systems and databases, including geo-spatial mapping tools, to give businesses the insights to make KPI-focused decisions.

Vertica

Vertica is an analytics and data exploration platform designed to ingest massive quantities of data, parse it, and then return business insights as reports and interactive graphics. Elastically scalable, it provides batch as well as streaming analytics with massively parallel processing, ANSI-compliant SQL querying and ACID transactions. Deployable in the cloud, on-premise, on Apache Hadoop and as a hybrid model, its resource manager enables concurrent job runs with reduced CPU and memory usage and data compression for storage optimization. A serverless setup and advanced data trawling techniques help users store and access their data with ease.

AgilOne

AgilOne is a data exploration and analysis product that is built from the ground up to handle vast quantities of data from a variety of sources. Utilizing machine learning, self-service BI, built-in calculations and more, it’s able to parse, prepare and analyze massive amounts of information. It’s ideal for small to medium-sized businesses.

Actian

Actian is a cloud data management platform that enables data integration with fully managed warehousing, transformation and analytics for enterprises. It integrates with various cloud-based and on-premise technologies and services. With massively parallel processing and data compression on the back end, its embedded analytical engine works in tandem with a robust RDBMS, complementing its computing with built-in user-defined functions to process online transactions at scale. Part of the Cloud Security Alliance, the vendor consistently updates their best practices to ensure the security of its cloud services. It offers a 30-day free trial. Pricing is available on request.

1010data

1010data is a market intelligence and enterprise analytics solution that helps track consumer insights and market trends. In addition to vendor-critical insights, it provides brand performance metrics to buy-side entities. Seamlessly embeddable, it can also function as a standalone private-label option. Data scientists and statisticians leverage its integration with R to view and query data tables. It enables analytics development through its QuickApps framework. By tracking consumer spending trends and brand performance, it enables businesses to better position their products in the marketplace.

TrendMiner

TrendMiner is a well-known software application that ranks 116 among all Manufacturing Software according to our research analysts. TrendMiner can be deployed in the cloud and on-premise and is accessible from a few platforms including Windows devices.

Microsoft Machine Learning Server

Microsoft Machine Learning Server is an AI-enabled enterprise intelligence solution with big data capabilities. With full R and Python support, it produces predictive and retrospective analytics, with the ability to score structured and unstructured data. It is fully machine learning enabled, with options to train or use prebuilt trained models. It integrates with major open-source resources and other Microsoft entities like Azure. Its web service publishing and operationalization features streamline putting insights to work.

Pivotal Big Data Suite

The Pivotal Big Data Suite is an integrated solution that enables big data management and analytics for enterprises. It includes Greenplum, a business-ready data warehouse, GemFire, an in-memory data grid and Postgres which helps deploy clusters of the PostgreSQL database. With a data architecture built for batch as well as streaming analytics, it can be deployed on-premise and in the cloud, and as part of Pivotal Cloud Foundry. Since 2019, Pivotal has been owned by VMware. Besides an annual subscription, the vendor offers pricing by core for its computing resources. It is compatible with open data platform (ODP) versions of Hadoop and works with Apache Solr to provide text analytics.

Muddy Boots Software

